Data drives businesses, economies, and entire countries, giving direction to the strategic decisions that matter most. In the digital era, the need for data that yields valuable information has become more pressing than ever. That calls for a well-built Data Infrastructure.

The global data visualization market size stood at USD 8.85 billion in 2019 and is projected to reach USD 19.20 billion by 2027, exhibiting a CAGR of 10.2% during the forecast period.

So, Data Infrastructure can be seen as the complete set of technology and processes used to store, maintain, organize, and distribute data in the form of insightful information.

It includes the data assets themselves, the organizations responsible for operating and maintaining them, and the policies and guides that describe how to manage the data and make the most of it.

It is formed by structuring data systematically so that it yields valuable, high-quality insights that lead to accurate decisions.

As Carly Fiorina says, “The goal is to transform data into information, and information into insight.”

A robust Data Infrastructure is what makes this possible. In this blog, you’ll get in-depth insights into the following data infrastructure topics:

- Steps to Build a Robust Data Infrastructure

- Types of Data Infrastructure

- 5 Best Tools for Automation of Data Infrastructure

- Spatial Data Infrastructure

- Data Infrastructure as a Service (IaaS)

- Data Infrastructure Example

So, let’s dive in:

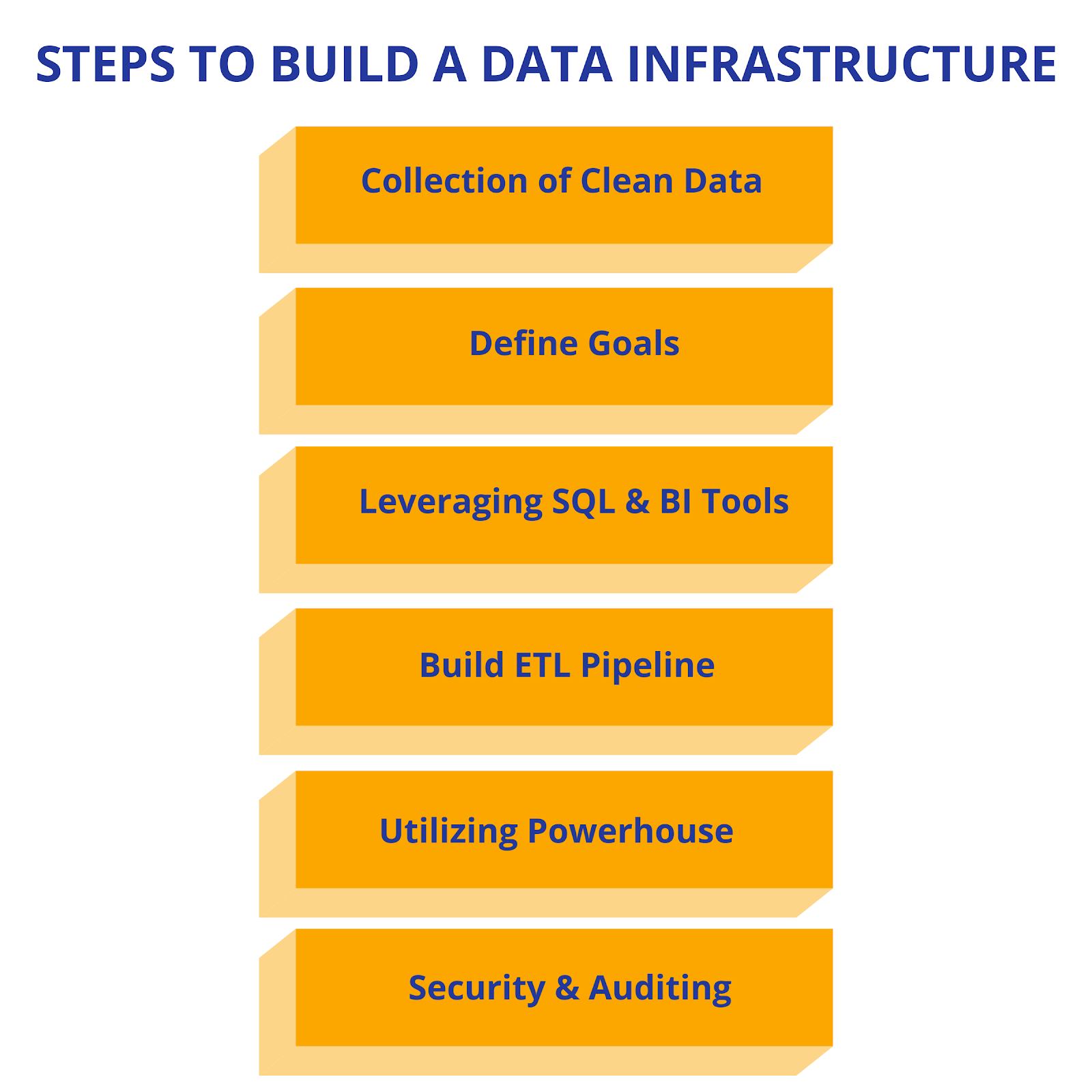

Steps to Build a Robust Data Infrastructure:

- Collection of Clean Data

Getting the maximum benefit starts with collecting clean, accurate, and relevant data. Suppose your sales team receives market statistics and builds a strategy to maximize sales where market coverage appears low.

If, after implementing every strategy with no results, they discover that flawed data led them to target the wrong market, all that effort has been wasted!

This shows why collecting the RIGHT data matters: it points you toward correct, informed decisions. A streamlined system for collecting clean data reduces the risk of skewed or duplicated statistics.
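To make “clean data” concrete, here is a minimal Python sketch that normalizes, validates, and de-duplicates incoming market records before they reach any report. The `MarketRecord` type, its field names, and the validation rules are hypothetical examples, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MarketRecord:
    region: str
    product: str
    units_sold: int


def clean_records(raw_rows: list[dict]) -> list[MarketRecord]:
    """Normalize, validate, and de-duplicate raw market rows."""
    seen: set[tuple[str, str]] = set()
    cleaned: list[MarketRecord] = []
    for row in raw_rows:
        # Normalize text fields so " north " and "North" count as the same region.
        region = str(row.get("region") or "").strip().title()
        product = str(row.get("product") or "").strip().lower()
        try:
            units = int(row.get("units_sold"))
        except (TypeError, ValueError):
            continue  # drop rows whose unit count is missing or not a number
        if not region or not product or units < 0:
            continue  # drop incomplete or impossible rows
        key = (region, product)
        if key in seen:
            continue  # keep only the first row for each region/product pair
        seen.add(key)
        cleaned.append(MarketRecord(region, product, units))
    return cleaned


raw = [
    {"region": " north ", "product": "Widget", "units_sold": "120"},
    {"region": "North", "product": "widget", "units_sold": 120},  # duplicate once normalized
    {"region": "South", "product": "Gadget", "units_sold": "n/a"},  # not a number
    {"region": "", "product": "Widget", "units_sold": 5},  # missing region
    {"region": "East", "product": "Widget", "units_sold": 40},
]
print(clean_records(raw))
```

Of the five sample rows, only two survive: the North widget row (kept once) and the East widget row. Dropping bad rows at collection time is what keeps later statistics from being skewed or double-counted.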

- Define Goals

Every business has data in various forms, collected through manual entry or automated integrations. To make this process more robust, start by defining your business goals.

Are you looking to capture unexplored markets, or do you want to improve the product experience for current customers? Prioritize your goals to decide which data matters to you most.

- Leveraging SQL & BI Tools

SQL opens up your vast data to your entire organization. Its readable, declarative syntax makes data accessible to less technical colleagues; in effect, it can turn everyone into a query analyst.

For example, you can set up your primary database on MySQL or PostgreSQL, while Elasticsearch, MongoDB, and DynamoDB are popular choices for NoSQL databases.
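As an illustration of how SQL puts data within everyone's reach, the sketch below uses Python's built-in `sqlite3` module as a lightweight stand-in for MySQL or PostgreSQL; the `sales` table and its columns are made up for the example.

```python
import sqlite3


def sales_by_region(conn: sqlite3.Connection) -> list[tuple[str, int]]:
    """Return total units per region, highest first, using plain SQL."""
    query = """
        SELECT region, SUM(units_sold) AS total_units
        FROM sales
        GROUP BY region
        ORDER BY total_units DESC
    """
    return conn.execute(query).fetchall()


# In-memory database standing in for a MySQL/PostgreSQL server.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, product TEXT, units_sold INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("North", "widget", 120), ("East", "widget", 40), ("North", "gadget", 30)],
)
print(sales_by_region(conn))  # [('North', 150), ('East', 40)]
```

The same `GROUP BY` query would run largely unchanged on either MySQL or PostgreSQL, which is part of what makes SQL a shared language across teams.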

Next come Business Intelligence (BI) tools, which play an integral role in understanding and analyzing the data. For example, a few of the best BI tools are Datapine, Clear Analytics, and GoodData.

- Build an ETL Pipeline

As your business grows, you have to take a few more steps to handle larger volumes of data effectively. This is the time to add more stages to your ETL (Extract, Transform, Load) pipeline, scaling it up to turn data into valuable information.

Your ETL pipeline then works as a series of stages that carry out its three main activities: first, extracting data from sources; second, transforming it into standardized formats; and third, loading it into SQL-queryable stores.
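The three ETL stages can be sketched in plain Python. This is a minimal illustration, assuming a small CSV export as the source and an in-memory SQLite database as the SQL-queryable store; the `orders` table, column names, and cleaning rules are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: inconsistent spacing and casing, plus a row with no amount.
RAW_CSV = """order_id,amount,currency,order_date
1001, 19.99 ,usd,2021-03-01
1002,5,USD,2021-03-02
1003,,USD,2021-03-02
"""


def extract(text: str) -> list[dict]:
    """Extract: read rows from a CSV source (an in-memory string here)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple[int, float, str, str]]:
    """Transform: standardize types and formats, skipping rows without an amount."""
    out = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue
        out.append((int(row["order_id"]), round(float(amount), 2),
                    row["currency"].strip().upper(), row["order_date"]))
    return out


def load(conn: sqlite3.Connection, rows: list[tuple[int, float, str, str]]) -> None:
    """Load: write standardized rows into a SQL-queryable table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders "
                 "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT, order_date TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT order_id, amount, currency FROM orders").fetchall())
```

Keeping each stage a separate function is what lets a pipeline grow: a new source only needs its own `extract`, and extra transformation steps can be chained in without touching the loader. A production pipeline would typically run these stages under a scheduler or orchestration tool.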

- Utilizing a Data Warehouse

The next step is to bring all your SQL data into a Data Warehouse. A Data Warehouse is set up in two different levels. One level holds clean, processed data that gives a clearer view of the business.

The other level holds unprocessed data that lands directly in tables.
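A minimal sketch of the two warehouse levels, again using SQLite as a stand-in for a real warehouse: raw rows land untouched in one table, and a SQL statement derives a cleaned, de-duplicated table from it. The table names `raw_signups` and `clean_signups` and the cleaning rules are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Raw level: data lands here exactly as received, with no validation.
conn.execute("CREATE TABLE raw_signups (email TEXT, country TEXT, signed_up_at TEXT)")
conn.executemany(
    "INSERT INTO raw_signups VALUES (?, ?, ?)",
    [
        (" Ana@Example.com", "pt", "2021-05-01"),
        ("ana@example.com", "PT", "2021-05-01"),  # duplicate once normalized
        ("", "US", "2021-05-02"),                  # unusable: no email
        ("bo@example.com", "us", "2021-05-03"),
    ],
)

# Clean level: normalized, de-duplicated rows derived from the raw level with SQL.
conn.execute("""
    CREATE TABLE clean_signups AS
    SELECT DISTINCT
        LOWER(TRIM(email))   AS email,
        UPPER(TRIM(country)) AS country,
        signed_up_at
    FROM raw_signups
    WHERE TRIM(email) <> ''
""")

for row in conn.execute("SELECT * FROM clean_signups ORDER BY email"):
    print(row)
```

Keeping the raw level intact means the clean level can always be rebuilt if the cleaning rules change.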

- Security & Auditing

The final step is to audit the data regularly to catch ambiguities and inconsistencies. For instance, BigQuery audit logs let you see which queries were run and by whom. Alongside auditing, build a strong authentication and authorization process.

Row-level security goes a step further: it lets the data owner restrict which rows a given user can see, limiting access within the dataset itself.
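BigQuery implements row-level security with its own access policies; the Python sketch below is not that API, only a hypothetical illustration of the two ideas in this step: filtering rows by user before returning them, and recording every read in an audit log. `SecureTable` and the region-based policy are invented for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class SecureTable:
    """Hypothetical table wrapper that enforces row-level access and audits every read."""

    rows: list[dict]
    # Maps each user to the set of regions whose rows that user may see.
    row_policy: dict[str, set[str]]
    # Each entry: (user, UTC timestamp, number of rows returned).
    audit_log: list[tuple[str, str, int]] = field(default_factory=list)

    def read(self, user: str) -> list[dict]:
        allowed = self.row_policy.get(user, set())  # users without a policy see no rows
        visible = [row for row in self.rows if row["region"] in allowed]
        self.audit_log.append((user, datetime.now(timezone.utc).isoformat(), len(visible)))
        return visible


table = SecureTable(
    rows=[{"region": "EU", "revenue": 100}, {"region": "US", "revenue": 250}],
    row_policy={"eu_analyst": {"EU"}, "admin": {"EU", "US"}},
)
print(table.read("eu_analyst"))  # [{'region': 'EU', 'revenue': 100}]
print(table.read("intern"))      # []
```

Denying by default (an unknown user gets an empty result) is the safer design choice, and because every read is logged, even denied attempts show up during an audit.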

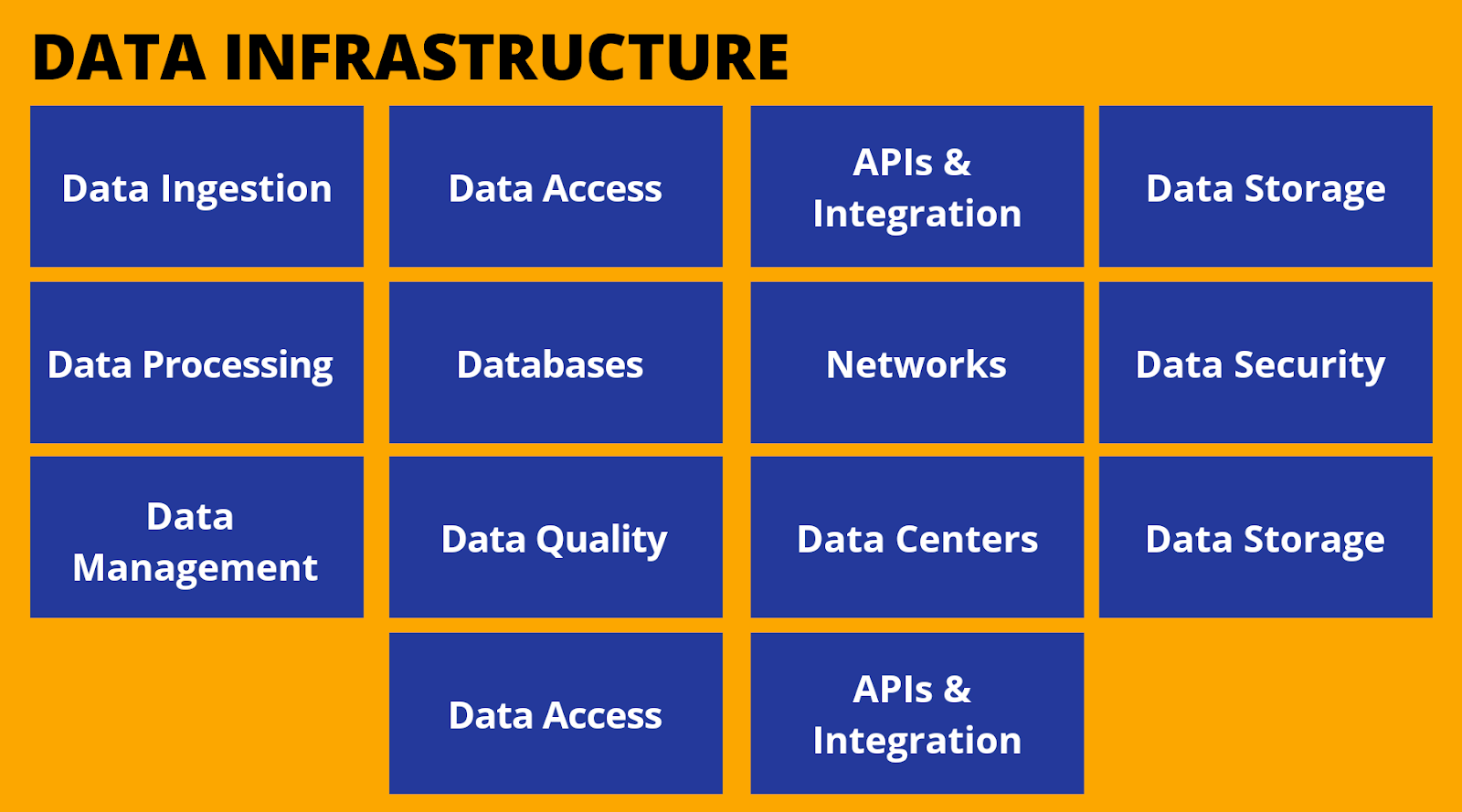

Types of Data Infrastructure:

- Data Ingestion

It is the infrastructure that transports data from one or more sources to a destination where it can be stored correctly for further analysis.

It starts with prioritizing data sources, validating individual files, and routing the files to the right destination.
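The three ingestion steps (prioritize sources, validate files, route them) might look like this in a minimal Python sketch. The source names, priority values, destinations, and validation rules are all hypothetical; files that fail validation or have no known route go to a quarantine area instead of being dropped silently.

```python
import json
import os
from dataclasses import dataclass


@dataclass
class IncomingFile:
    source: str
    name: str
    content: str


# Hypothetical source priorities: a lower number is ingested first.
SOURCE_PRIORITY = {"billing": 0, "crm": 1, "web_logs": 2}
# Hypothetical destinations, keyed by file extension.
ROUTES = {".csv": "warehouse/staging", ".json": "lake/events"}


def is_valid(f: IncomingFile) -> bool:
    """Reject empty files and JSON files that fail to parse."""
    if not f.content.strip():
        return False
    if f.name.endswith(".json"):
        try:
            json.loads(f.content)
        except json.JSONDecodeError:
            return False
    return True


def ingest(files: list[IncomingFile]) -> list[tuple[str, str]]:
    """Order files by source priority, validate each one, then route it by extension."""
    routed = []
    # sorted() is stable, so files from the same source keep their arrival order.
    for f in sorted(files, key=lambda item: SOURCE_PRIORITY.get(item.source, 99)):
        extension = os.path.splitext(f.name)[1]
        destination = ROUTES.get(extension, "quarantine") if is_valid(f) else "quarantine"
        routed.append((f.name, destination))
    return routed


incoming = [
    IncomingFile("web_logs", "clicks.json", '{"page": "/home"}'),
    IncomingFile("billing", "invoices.csv", "id,amount\n1,20\n"),
    IncomingFile("crm", "contacts.json", "{not json"),
    IncomingFile("crm", "notes.txt", "call back Monday"),
]
for name, destination in ingest(incoming):
    print(f"{name} -> {destination}")
```

Here the billing file is handled first, the malformed JSON and the unrecognized `.txt` file are quarantined, and the web log ends up in the event store.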

- Data Access

It is an interface for retrieving, modifying, copying, or moving data from IT systems in response to an access request.

It lets users obtain the data they need in a systematic, authenticated way, so that access is granted only with the approval of the organization or the data owner.

- API Integration

It is an interface that processes requests and ensures the seamless distribution of information across the enterprise's systems.

It also interacts and communicates with backend systems as well as various applications, devices, and programs.

- Data Storage

It refers to the physical retention and storage of data through various equipment and software.

- Data Processing

It covers how data is collected and handled so that meaningful information can be drawn from it. In other words, it converts raw data into a readable form, i.e. information.

- Databases

Databases are organized, systematic collections of data that can be accessed electronically through computer systems.

They are controlled by database management systems (DBMS); relational databases model data as rows and columns across a series of tables.

- Networks

It is a system of connections between computers, servers, mainframes, network devices, peripherals, etc. that lets them share data. For example, the Internet connects people all over the world.

- Data Security

It includes all the systems, applications, hardware, and software that protect data from unauthorized access, reducing the risk of data corruption throughout the data lifecycle.

It includes encryption, hashing, tokenization, and key management practices for the comprehensive protection of data across all platforms.
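Two of the techniques named above, hashing and tokenization, can be sketched with Python's standard library. `pseudonymize` uses a keyed hash (HMAC-SHA256) so the same input always maps to the same irreversible digest, while the hypothetical `TokenVault` replaces a sensitive value with a random token that only the vault can map back. The randomly generated demo key stands in for proper key management; in practice the key would be held in a key-management service, not in code.

```python
import hashlib
import hmac
import secrets


class TokenVault:
    """Hypothetical tokenization service: swaps sensitive values for random tokens."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to one token.
        if value not in self._value_to_token:
            token = "tok_" + secrets.token_hex(8)
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def detokenize(self, token: str) -> str:
        return self._token_to_value[token]


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed one-way hash (HMAC-SHA256): same input and key give the same digest."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Demo key only; a real system would fetch it from a key-management service.
KEY = secrets.token_bytes(32)
vault = TokenVault()
card = "4111 1111 1111 1111"
token = vault.tokenize(card)
print(token, vault.detokenize(token) == card)
print(pseudonymize("alice@example.com", KEY)[:16])
```

The difference matters in practice: a token can be reversed by whoever controls the vault, whereas the HMAC digest cannot be reversed at all, only recomputed by someone holding the key.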

- Data Management

It involves collecting, retaining, and using data in a secure and cost-efficient way. Its aim is to make the most effective use of data during decision-making and strategy-making.

- Data Quality

It refers to the condition of a piece of information. Data is regarded as high quality when it serves its intended purpose and accurately describes the real-world entities it represents.
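One common way to put “high quality” into numbers is to score a batch of records on dimensions such as completeness and validity. The sketch below is a minimal, hypothetical example: the required fields and the deliberately simple email pattern are assumptions, and real data-quality tools check many more rules.

```python
import re

# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def quality_report(records: list[dict], required: list[str]) -> dict[str, float]:
    """Score a batch on completeness (required fields filled) and validity (email format)."""
    if not records:
        return {"completeness": 0.0, "validity": 0.0}
    complete = sum(
        all(record.get(name) not in (None, "") for name in required) for record in records
    )
    valid = sum(bool(EMAIL_PATTERN.match(record.get("email") or "")) for record in records)
    return {
        "completeness": complete / len(records),
        "validity": valid / len(records),
    }


customers = [
    {"name": "Ana", "email": "ana@example.com"},    # complete and valid
    {"name": "Bo", "email": "bo@example"},          # complete, but no domain suffix
    {"name": "Cy", "email": "cy at example.com"},   # complete, but not an email
    {"name": "", "email": None},                    # incomplete and invalid
]
print(quality_report(customers, ["name", "email"]))  # {'completeness': 0.75, 'validity': 0.25}
```

The two scores tell different stories: three out of four records are filled in, yet only one actually holds a usable email address, which is why data quality is usually measured on more than one dimension.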

- Data Centers

It is the physical facility, or dedicated space, that an organization uses to house its applications and data.

It contains equipment such as routers, switches, firewalls, storage systems, servers, and application delivery controllers.

- Data Analysis

It is the process of inspecting data in depth. It guides how data is transformed and modeled to support decision-making.

In short, it supports discovering valuable information and drawing the right conclusions.

- Data Visualization

It refers to representing data in graphical form, such as graphs, charts, and maps. It makes numbers easier to communicate by showing the relationships within the data visually.

- Cloud Platforms

It provides servers and other hardware, hosted in remote data centers and accessed over the Internet, for storing and processing data.

5 Best Tools for Automation of Data Infrastructure:

- Ansible

Ansible is an orchestration tool that covers configuration management, application deployment, cloud provisioning, and more. It aims to manage every system in an IT infrastructure and how those systems interact.

It can also be managed through a web interface, Ansible Tower, which offers a user-friendly, easy-to-manage interface along with a wide range of pricing models.

- Puppet

Puppet is an Infrastructure as Code (IaC) tool. It lets users declare the state they want their infrastructure to be in and then automates the system to reach that desired state without deviation.

This open-source tool is preferred by big corporations such as Dell and Google.
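Puppet describes resources in its own declarative language, so the Python below is not Puppet code. It is only a hypothetical sketch of the desired-state idea: compare what is declared with what exists, act only on the differences, and do nothing when they already match. The `"absent"` marker loosely mirrors Puppet's `ensure => absent`.

```python
ABSENT = "absent"  # loosely mirrors Puppet's `ensure => absent`


def reconcile(desired: dict[str, str], actual: dict[str, str]) -> list[str]:
    """Bring `actual` in line with `desired` and return the actions taken.

    Both dicts map a resource name (here, a package) to its state (here, a version).
    Resources that are not declared in `desired` are left alone.
    """
    actions = []
    for name, state in desired.items():
        if state == ABSENT:
            if name in actual:
                del actual[name]
                actions.append(f"remove {name}")
        elif actual.get(name) != state:
            actual[name] = state
            actions.append(f"set {name} -> {state}")
    return actions


server = {"nginx": "1.18", "telnet": "0.17", "curl": "7.74"}
desired = {"nginx": "1.20", "postgresql": "13", "telnet": ABSENT}
print(reconcile(desired, server))  # ['set nginx -> 1.20', 'set postgresql -> 13', 'remove telnet']
print(reconcile(desired, server))  # [] because the server already matches
```

The second call doing nothing is the key property (idempotence): the declaration can be applied again and again, and the system only changes when it has drifted from the desired state.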

- Datadog

Datadog is a cloud-based (SaaS) monitoring tool that provides detailed insights into applications, networks, and servers. It also helps detect and troubleshoot problems in the system.

- Selenium

Selenium lets users quickly write test scripts that catch bugs through automated testing.

It is a powerful tool that automates web browsers and tests web applications. Moreover, this open-source software is easy to use and is well supported by extensions.

- Docker

Docker supports continuous integration and deployment (CI/CD) of code. Its Dockerfiles let developers create and manage applications effectively, and its containers give code, system libraries, and other dependencies their own isolated environment.

This makes Docker a great time-saver that boosts productivity and integrates easily with existing systems.

Spatial Data Infrastructure:

Spatial Data Infrastructure is a data infrastructure framework: a set of agreements on technology standards, institutional arrangements, policies, etc.

It enables geospatial information to be discovered and used for purposes beyond the one it was originally created for. It also standardizes formats and protocols.

The basic components of Spatial Data Infrastructure (SDI) are:

- Software client – to display, query, and analyze spatial data.

- Catalog Service – to discover, browse, and query the metadata, spatial services, spatial datasets, or other resources.

- Spatial Data Service – to allow data delivery through the Internet.

- Processing Services – such as datum and projection transformations, or the transformation of cadastral survey observations and owner requests into cadastral documentation.

- Spatial Data Repository – for storage of data.

- GIS software – for creating and updating spatial data.
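As an example of the projection transformations a processing service performs, the sketch below converts WGS 84 longitude/latitude into Web Mercator (EPSG:3857) coordinates, the projection used by most web map tiles, using the spherical Mercator formulas. It is a single hand-written transform for illustration; a real processing service would normally rely on a library such as PROJ, which handles many datums and projections.

```python
import math

# WGS 84 semi-major axis in metres, used as the sphere radius by Web Mercator (EPSG:3857).
EARTH_RADIUS_M = 6378137.0
# Latitude limit at which the projected world map becomes a square.
MAX_LATITUDE = 85.0511287798


def to_web_mercator(lon_deg: float, lat_deg: float) -> tuple[float, float]:
    """Project WGS 84 longitude/latitude in degrees to Web Mercator x/y in metres."""
    if abs(lat_deg) > MAX_LATITUDE:
        raise ValueError("Web Mercator is only defined up to about 85.05 degrees of latitude")
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(math.tan(math.pi / 4 + math.radians(lat_deg) / 2))
    return x, y


print(to_web_mercator(-0.1276, 51.5072))  # approximate coordinates of central London
```

At 180° longitude, x is about 20,037,508 m, half the width of the square Web Mercator world map; the latitude cutoff is chosen so that y reaches the same value at the poles' edge.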

An SDI also follows international technical standards that govern how its software components interact. For example, OGC and ISO standards cover the delivery of maps, vector data, and raster data.

Data Infrastructure as a Service (IaaS):

IaaS is an online service that provides computing infrastructure and various computing resources on demand over the internet.

Moreover, it is one of the three main forms of cloud computing services, along with software as a service (SaaS) and platform as a service (PaaS).

IaaS offers a level of scalability that is hard to achieve with traditional on-premises infrastructure. It is a robust platform that gives access to IT resources that can be scaled up or down as capacity requirements change.

It is an ideal model for businesses of every size, from small and mid-sized companies to large corporations with heavy workloads and data storage requirements.

For example, a few of the leading IaaS providers are Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform, IBM Cloud, Alibaba Cloud, Oracle Cloud, Virtustream, CenturyLink, and Rackspace.

The key offerings of this technology include compute resources, storage, and networking, along with self-service interfaces, web-based user interfaces, APIs, management tools, and cloud software infrastructure delivered as services.

With great data storage capacity comes greater exposure to security threats.

The protection infrastructure varies from one cloud service to another; however, every service is to some extent exposed to unforeseen data losses. Despite this, IaaS adoption is surging as organizations build agile and effective IT environments.

Data Infrastructure Example:

Data Infrastructure has evolved tremendously as research has improved data assets, organizational models, and policies.

For example, Crossref and Datacite are non-profit membership organizations that provide unique identifiers for datasets and research papers.

One recent Crossref project creates identifiers for the research ecosystem, licensing and making the best use of open data from social media platforms to analyze scholarly research.

Take Away:

Data Infrastructure has evolved greatly, from Hadoop's big-data clusters to Spark, which has largely replaced Hadoop MapReduce for data processing. Today it can live in on-premises data centers or in online cloud systems.

Data infrastructure exists to enable, protect, preserve, and serve the applications that convert data into valuable information. As technology keeps changing, data infrastructure has high potential to grow and meet those changes.